In the spirit of continued innovation, this year GoDaddy's annual internal hackathon focused on generative AI to accelerate collaboration on, and incubation of, this transformative technology. The hackathon brought together teams from across the GoDaddy technical community to explore creative solutions to real-world challenges using generative AI. The Copy AIchemists team, made up of Chad Neff, Nick Scuilla, and Saatvik Arya, explored integrating Large Language Models (LLMs) into the workflow of content editors.

GoDaddy is experimenting with creating new experiences using a headless cloud CMS. This move allows us to streamline content creation, reduce infrastructure maintenance, and easily extend the editor using a variety of SDKs.

Our goal for the hackathon was to increase efficiency for content editors by incorporating generative AI suggestions into the CMS. We also used GoDaddy's internal Content-as-a-Service (CaaS), which provides a centralized platform for querying different LLMs. Our prototype won the 'Data Overload' category at the hackathon and showed how generative AI can raise the standard of content editing within the CMS.

Problem

Aiming to leverage CaaS and CMS extensions within a 48-hour window, we pinpointed four challenges that hinder the efficiency and effectiveness of content editors.

Legacy systems migration: Migrating content from an on-prem CMS to a headless CMS. Only a very limited set of top-level domains (TLDs), such as .com, had Content API responses with React components. Most still used legacy components that had no corresponding content types in the headless CMS, and these incompatible component structures made it difficult to migrate content between the two platforms.

Web accessibility and search engine optimization (SEO): Automating the generation of alt text for images. This is vital not only for meeting web accessibility standards but also for enhancing SEO. The manual creation of descriptive alt text is time-consuming and can be inconsistent, highlighting the need for an automated, reliable solution.

SEO optimization: Efficiently managing SEO fields, including keywords, titles, and descriptions, was another significant challenge. These elements are crucial for improving search visibility and driving organic traffic, yet they require a nuanced understanding of SEO best practices, which can be labor-intensive and complex.

Text generation within CMS: Generating high-quality, contextually appropriate short-form text directly within the CMS. This would allow content editors to rapidly produce creative and relevant text without leaving the CMS environment.

By addressing these challenges, our solution aimed to streamline workflows, enhance content quality, and improve overall productivity for content editors.

Solution

1. Streamlining content migration

Moving TLD pages from an on-prem CMS to the headless CMS was challenging because the components used in the two systems follow different design languages. Most TLDs in the on-prem CMS use legacy Razor components, while the headless CMS uses modern React components. This leads to inconsistencies in the Content API responses, making it difficult to migrate content between the two platforms.

The content in the on-prem CMS is exposed through the Content API (a REST API), which can return each content item individually or all content items for a page path. For example, the CMS data used to render https://www.godaddy.com/tlds/com-domain can be viewed with the Content API call https://content.godaddy.com/godaddy/sales/tlds/com-domain?format=reactv2.
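The URL pattern in that example can be wrapped in a small helper. This is a minimal sketch, assuming every page path maps onto `https://content.godaddy.com/godaddy/sales` plus the path and a `format` query parameter; that pattern is inferred from the single .com URL above, and `contentApiUrl` and `fetchPageContent` are hypothetical names.

```javascript
// Assumption: base URL and `format` value are inferred from the single .com
// example above; other pages or formats may follow a different pattern.
const CONTENT_API_BASE = 'https://content.godaddy.com/godaddy/sales';

// Map a public page path (e.g. '/tlds/com-domain') to its Content API URL.
function contentApiUrl(pagePath, format = 'reactv2') {
  const url = new URL(CONTENT_API_BASE + pagePath);
  url.searchParams.set('format', format);
  return url.toString();
}

// Fetch all content items for a page path (needs a runtime with global fetch).
async function fetchPageContent(pagePath) {
  const res = await fetch(contentApiUrl(pagePath));
  if (!res.ok) throw new Error(`Content API returned ${res.status} for ${pagePath}`);
  return res.json();
}
```

Calling `fetchPageContent('/tlds/com-domain')` would then return the same JSON as the .com URL above, ready to be used as prompt context.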

We aimed to automate the migration process by using LLMs to generate marketing copy for TLDs based on existing Content API responses. This allowed us to generate marketing copy for TLDs that didn't have Content API responses with React components. This automation reduced the manual effort required to migrate content, ensuring a smooth transition from on-prem CMS to headless CMS.

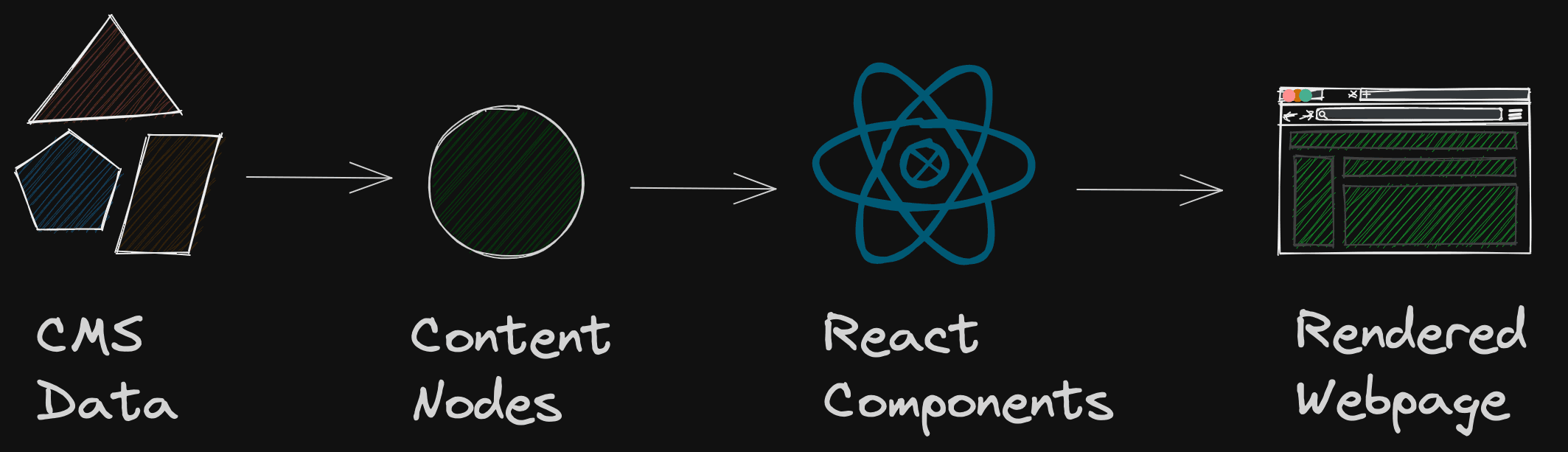

Generating a page to be rendered by a Gasket-based rendering layer requires first transforming the Content API response into headless CMS entries. These entries are then serialized to ContentNodes JSON, which is finally transformed into React JSX.
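The last two steps of that pipeline can be sketched as follows, assuming a ContentNode is a `[componentName, props]` pair like the single-node example in this section and that each headless content type maps to one React component. `COMPONENT_FOR_TYPE`, the `tldMarquee` type, and the entry shape `{ contentType, fields }` are hypothetical, and emitting JSX as text only stands in for what a real renderer would do with `React.createElement`.

```javascript
// Hypothetical mapping from headless CMS content types to React component names.
const COMPONENT_FOR_TYPE = new Map([['tldMarquee', 'ReactComponent']]);

// Serialize a headless CMS entry ({ contentType, fields }) into a ContentNode.
function entryToContentNode(entry) {
  const component = COMPONENT_FOR_TYPE.get(entry.contentType);
  if (!component) throw new Error(`No component registered for ${entry.contentType}`);
  return [component, { ...entry.fields }];
}

// Emit JSX source for a ContentNode; each prop becomes a JSX expression
// container holding a JSON literal, which is also a valid JS literal.
function contentNodeToJsx([component, props]) {
  const attrs = Object.entries(props)
    .map(([key, value]) => `${key}={${JSON.stringify(value)}}`)
    .join(' ');
  return attrs ? `<${component} ${attrs} />` : `<${component} />`;
}

const node = entryToContentNode({
  contentType: 'tldMarquee',
  fields: { title: 'Hello World', buttonText: 'Call to Action' },
});
console.log(contentNodeToJsx(node));
// <ReactComponent title={"Hello World"} buttonText={"Call to Action"} />
```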

The following diagram illustrates the flow of rendering a webpage from a headless CMS JSON response using ContentNodes:

```javascript
// Example of a single ContentNode
[
  'ReactComponent',
  {
    title: 'Hello World',
    buttonText: 'Call to Action',
  },
]
```

We used the following system prompt to generate structured JSON responses:

system: you are a marketing copywriter. you will be provided an API response for a Top Level Domain (TLD). you will parse the API response for marketing copy and create marketing copy for the new Top Level Domain (TLD). you will then return the API response with the new marketing copy. only return valid JSON. don't include any other text.
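One way to wire this prompt into a request is sketched below, assuming an OpenAI-style chat message array. The layout of the user message, the helper names, and the fence-stripping fallback are assumptions rather than the team's implementation; the CaaS call itself is left out because its API is internal.

```javascript
// System prompt from above, split across lines for readability.
const SYSTEM_PROMPT =
  'you are a marketing copywriter. you will be provided an API response for a Top Level ' +
  'Domain (TLD). you will parse the API response for marketing copy and create marketing ' +
  'copy for the new Top Level Domain (TLD). you will then return the API response with ' +
  "the new marketing copy. only return valid JSON. don't include any other text.";

// Assumed message layout: the reference (.com) response and the new TLD go in
// the user turn; how the original user message was structured is not shown.
function buildMigrationMessages(referenceResponse, newTld) {
  return [
    { role: 'system', content: SYSTEM_PROMPT },
    {
      role: 'user',
      content: `New TLD: ${newTld}\nAPI response:\n${JSON.stringify(referenceResponse)}`,
    },
  ];
}

// Defensive parse: strip a markdown code fence if the model adds one anyway.
function parseModelJson(text) {
  const body = text.trim().replace(/^`{3}(?:json)?\s*/i, '').replace(/\s*`{3}$/, '');
  return JSON.parse(body);
}
```

If `JSON.parse` throws, the response can be rejected or retried before it ever reaches an editor.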

Using the system prompt, we were able to reliably generate structured API responses with contextually relevant marketing copy for TLDs. This also allowed us to produce tailored marketing narratives in a mocked API response in JSON format. Looking ahead, we could also incorporate OpenAI function calling to further simplify system prompts.
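As a sketch of the function-calling idea, assuming the OpenAI Chat Completions `tools` format, the expected output can be declared as a JSON Schema instead of being spelled out in the system prompt. The tool name and properties below are hypothetical.

```javascript
// Hypothetical tool in the OpenAI Chat Completions `tools` format; the name and
// properties are illustrative, not an existing GoDaddy schema.
const returnMarketingCopyTool = {
  type: 'function',
  function: {
    name: 'return_marketing_copy',
    description: 'Return new marketing copy for a Top Level Domain (TLD).',
    parameters: {
      type: 'object',
      properties: {
        tld: { type: 'string', description: 'The new TLD, e.g. ".ski"' },
        headline: { type: 'string' },
        description: { type: 'string' },
        buttonText: { type: 'string' },
      },
      required: ['tld', 'headline', 'description'],
    },
  },
};

// Extra request options that force the model to answer through the tool.
const toolRequestOptions = {
  tools: [returnMarketingCopyTool],
  tool_choice: { type: 'function', function: { name: 'return_marketing_copy' } },
};
```

The model then returns the copy in `tool_calls[0].function.arguments` as a JSON string, which still needs `JSON.parse` and validation.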

After the JSON responses were generated, we transformed the on-prem entries into headless entries. This process involved mapping fields from the on-prem CMS to the headless CMS while preserving the content structure and metadata. Automating this transformation streamlined the migration process, reducing manual effort and minimizing the risk of errors.
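The field mapping can be as simple as a lookup table. In this minimal sketch, `FIELD_MAP`, its keys, and the per-locale `{ [locale]: value }` field shape are assumptions about the two CMSs, not their actual schemas; unmapped fields are returned separately so nothing is dropped silently.

```javascript
// Hypothetical field map: on-prem Content API keys -> headless CMS field IDs.
const FIELD_MAP = new Map([
  ['heading', 'title'],
  ['ctaLabel', 'buttonText'],
  ['bodyCopy', 'description'],
]);

// Convert one on-prem content item into a headless entry payload. Fields are
// stored per locale (an assumed shape); unmapped keys are returned separately
// for editor review instead of being dropped.
function toHeadlessEntry(onPremItem, contentType, locale = 'en-US') {
  const fields = {};
  const unmapped = {};
  for (const [key, value] of Object.entries(onPremItem)) {
    const target = FIELD_MAP.get(key);
    if (target) fields[target] = { [locale]: value };
    else unmapped[key] = value;
  }
  return { contentType, fields, unmapped };
}
```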

The following diagram illustrates the workflow for migrating content from on-prem CMS to headless CMS using our application:

An existing Content API response for the .com TLD (https://content.godaddy.com/godaddy/sales/tlds/com-domain?format=reactv2) can be added to the prompt's input context. Based on the system prompt and the new TLD input, the model returns relevant marketing copy as a structured mock API response. The diff viewer lets editors review the changes, which should be limited to marketing copy.
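To complement the visual diff viewer, a check along these lines could flag changes that go beyond marketing copy. It is a heuristic sketch, assuming copy lives in string values: it walks both JSON trees, records every leaf that differs, and reports any difference that is not a string-to-string edit (changed numbers, added keys, swapped structures). `jsonDiff` and `nonCopyChanges` are hypothetical names.

```javascript
// Recursively collect leaf-level differences between two JSON values.
// Each difference records its path plus the before/after values.
function jsonDiff(before, after, path = '$', out = []) {
  const isObj = (v) => v !== null && typeof v === 'object';
  if (isObj(before) && isObj(after) && Array.isArray(before) === Array.isArray(after)) {
    const keys = new Set([...Object.keys(before), ...Object.keys(after)]);
    for (const key of keys) jsonDiff(before[key], after[key], `${path}.${key}`, out);
  } else if (before !== after) {
    out.push({ path, before, after });
  }
  return out;
}

// Heuristic: marketing copy edits replace one string with another, so any
// other kind of change is flagged for closer review.
function nonCopyChanges(diffs) {
  return diffs.filter((d) => typeof d.before !== 'string' || typeof d.after !== 'string');
}
```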

The Content API response for the .com TLD is used as input context because it uses the modern React components. Most TLD pages use legacy Razor components, like the following .ski TLD page.

The legacy TLD pages don't have context-aware marquee images. We incorporated a DALL-E prompt to generate marquee images tailored to each TLD. This feature also allows editors to regenerate images if contrast issues arise, such as the following example.
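A TLD-specific marquee request might look like the sketch below, assuming the call goes directly to OpenAI's Images API (`POST /v1/images/generations`) rather than through CaaS. The prompt wording, including the instruction to keep part of the image low-detail for text contrast, is hypothetical.

```javascript
// Hypothetical prompt template for a TLD marquee image; wording is illustrative.
function marqueeImagePrompt(tld, theme) {
  return `Wide marketing banner background for the ${tld} domain extension, ` +
    `themed around ${theme}. No text or logos. Keep the left third uncluttered ` +
    'with even, low-detail tones so overlaid headline text keeps strong contrast.';
}

// Request body for OpenAI's Images API with DALL-E 3.
function marqueeImageRequest(tld, theme) {
  return {
    model: 'dall-e-3',
    prompt: marqueeImagePrompt(tld, theme),
    size: '1792x1024', // landscape size supported by DALL-E 3
    n: 1,              // DALL-E 3 generates one image per request
  };
}
```

Because generations are not deterministic, regenerating after a contrast problem can be as simple as sending the same request again or tightening the prompt.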

2. Automating alt text for images

Alt text is crucial for web accessibility and SEO because it describes images to search engines and to visually impaired users. Currently, content editors can add images from a digital asset manager using the DAMImage content type. We added a custom alt text field that lets the editor generate captions from the image URL. The captions are generated using Amazon Rekognition, which is abstracted by the CaaS API. The editor can also choose between generated captions of different lengths. If a generated caption is inaccurate or misses a nuance, the editor can freely modify the alt text.
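Choosing between caption lengths could work like the sketch below, assuming CaaS returns several candidate captions as plain strings. The 125-character cap is a common accessibility rule of thumb rather than a standard, and stripping a leading "Image of" follows the guideline that screen readers already announce images; `pickAltText` and `normalizeCaption` are hypothetical helpers.

```javascript
// Normalize a caption: trim, drop a redundant "image of"-style prefix, capitalize.
function normalizeCaption(caption) {
  const text = caption.trim().replace(/^(an? )?(image|picture|photo) of /i, '');
  return text.charAt(0).toUpperCase() + text.slice(1);
}

// Pick alt text by preferred length ('short' | 'medium' | 'long') from candidate
// captions, ignoring empty ones and any longer than maxChars.
function pickAltText(captions, length = 'short', maxChars = 125) {
  const usable = captions
    .map(normalizeCaption)
    .filter((c) => c.length > 0 && c.length <= maxChars)
    .sort((a, b) => a.length - b.length);
  if (usable.length === 0) return '';
  if (length === 'short') return usable[0];
  if (length === 'long') return usable[usable.length - 1];
  return usable[Math.floor((usable.length - 1) / 2)]; // 'medium'
}
```

The selected text only pre-fills the field; the editor still has the final say.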

3. Optimizing SEO fields

Optimizing content for search engines is a nuanced process that relies on careful curation of SEO-specific fields such as keywords, titles, and descriptions. The challenge lies in sifting through entries to find references, analyzing the text, and distilling this information into SEO elements. This labor-intensive task demands significant time and carries the risk of human error.

We streamlined this process by using the headless CMS API, which aggregates all entries linked to a page, as comprehensive context for generating SEO fields. Our approach uses LLMs to analyze and summarize these entries. By feeding relevant data from linked entries into tailored prompts, we enable the LLM to generate well-structured outputs, such as comma-separated keywords, coherent meta descriptions, and apt titles.

This system streamlines the SEO optimization workflow and reduces the manual effort involved. By automating the extraction and formulation of SEO fields, the solution improves productivity and helps keep metadata accurate and relevant, supporting the content's visibility and ranking potential in search engine results.
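The context-gathering and keyword-parsing steps might look like this minimal sketch. The entry shape `{ fields, links }`, the prompt wording, and the function names are assumptions; a real implementation would read linked entries from the headless CMS API and send the prompt through CaaS.

```javascript
// Collect string fields from a page entry and every entry it links to
// (depth-first), skipping entries already visited to avoid reference cycles.
function collectPageText(rootId, entriesById, seen = new Set()) {
  if (seen.has(rootId) || !entriesById[rootId]) return [];
  seen.add(rootId);
  const entry = entriesById[rootId];
  const own = Object.values(entry.fields).filter((v) => typeof v === 'string');
  const linked = (entry.links ?? []).flatMap((id) => collectPageText(id, entriesById, seen));
  return [...own, ...linked];
}

// Prompt asking for comma-separated keywords; wording is illustrative.
function keywordPrompt(texts, maxKeywords = 10) {
  return `Suggest up to ${maxKeywords} SEO keywords for a page with the content below. ` +
    `Return them as comma-separated values only.\n\n${texts.join('\n')}`;
}

// Normalize the model's comma-separated answer: trim, lowercase, de-duplicate.
function parseKeywords(answer) {
  const words = answer.split(',').map((k) => k.trim().toLowerCase()).filter(Boolean);
  return [...new Set(words)];
}
```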

4. Enabling direct interaction with LLMs

To give content editors the tools to craft high-quality, short-form text without leaving the CMS, we integrated the CMS directly with CaaS. This feature streamlines the creative process, producing text that aligns with the content's context and editorial needs.

We configured the CMS app with a brand profile so that LLM responses maintain a consistent brand voice and uphold core values. Editors can generate text that fits both the content's context and the brand's identity, ensuring a coherent voice across all published materials.
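A minimal sketch of folding a brand profile into the system prompt, so every generation request carries the same voice guidance. The profile fields, the `ExampleBrand` name, and the prompt wording are hypothetical, not GoDaddy's actual brand profile.

```javascript
// Hypothetical brand profile; fields and values are illustrative.
const brandProfile = {
  name: 'ExampleBrand',
  voice: ['friendly', 'plain-spoken', 'optimistic'],
  values: ['empower small businesses', 'be transparent'],
  avoid: ['jargon', 'exclamation marks'],
};

// Build a system prompt for a given field type from the brand profile.
function brandSystemPrompt(profile, fieldType) {
  return [
    `You write short-form ${fieldType} copy for ${profile.name}.`,
    `Voice: ${profile.voice.join(', ')}.`,
    `Core values: ${profile.values.join('; ')}.`,
    `Avoid: ${profile.avoid.join(', ')}.`,
    'Return only the requested text.',
  ].join('\n');
}
```

Keeping the profile as data rather than hard-coding it into each prompt lets the same guidance apply to every field type.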

Next steps

Having established a functional prototype during the hackathon, we explored further improvements to our solution. We identified several areas for improving the user experience and enhancing the capabilities of the system:

Semantic caching of prompts: Semantic caching will match new prompts to earlier ones by meaning rather than exact wording and reuse previously generated responses when appropriate, reducing latency and improving system responsiveness. This will not only speed up responses to frequently used prompts, but also cut repeated LLM calls, making content generation more efficient.

Generating embeddings for contextual relevance: We plan to automate the generation of embeddings from the content stored in both the on-prem CMS and the headless CMS. These embeddings will create a compact, searchable layer that enhances contextual relevance for prompts. Embeddings also enable advanced search functionality such as semantic search, which interprets the intent behind queries to deliver more accurate and contextually appropriate results, boosting editorial productivity across the content management system.

Encouraging adoption with preset prompts: To encourage widespread adoption, we’ll introduce preset prompts for various content types while still allowing editors the flexibility to craft custom prompts. This dual approach ensures that editors can quickly produce content with trusted defaults and also have the space to innovate when unique or new content requirements arise.

Intuitive contextual editing: Lastly, we will develop an intuitive interface for adding other entries as context, including an interactive prompt editor. This tool will allow content editors to reference other entries directly within a prompt, streamlining the process of generating contextually rich content.
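The semantic caching idea can be sketched as an in-memory cache keyed by prompt embeddings. This assumes an `embed` function backed by some embedding model (none is specified in the plans above) and a similarity threshold that would need tuning; `SemanticCache` is a hypothetical name, and the linear scan is for clarity, with a vector index being the likely replacement at scale.

```javascript
// Cosine similarity between two equal-length embedding vectors.
function cosine(a, b) {
  let dot = 0, na = 0, nb = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    na += a[i] * a[i];
    nb += b[i] * b[i];
  }
  return na && nb ? dot / Math.sqrt(na * nb) : 0;
}

// Minimal semantic cache: return a stored response when a new prompt's
// embedding is similar enough to a cached prompt's embedding.
class SemanticCache {
  constructor(embed, threshold = 0.92) {
    this.embed = embed;         // async (text) => number[]; model is an assumption
    this.threshold = threshold; // similarity cut-off; needs tuning per model
    this.entries = [];          // { vector, response }
  }

  async get(prompt) {
    const vector = await this.embed(prompt);
    let best = null;
    let bestScore = -Infinity;
    for (const entry of this.entries) {
      const score = cosine(vector, entry.vector);
      if (score > bestScore) { best = entry; bestScore = score; }
    }
    return best && bestScore >= this.threshold ? best.response : undefined;
  }

  async set(prompt, response) {
    this.entries.push({ vector: await this.embed(prompt), response });
  }
}
```

On a miss (`undefined`), the caller would query the LLM and then `set` the new prompt and response.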

These improvements are designed to improve contextual relevance of generated content, streamline the content creation process, and enhance the overall user experience for content editors. Implementing these improvements will allow content editors to seamlessly integrate AI-generated content into their workflow, enabling them to focus on creating engaging and impactful content.

Conclusion

Creating a working prototype in 48 hours at the GoDaddy Generative AI Hackathon demonstrated the benefits of integrating LLM-powered features into the headless CMS. Our solution addresses key challenges faced by content editors, streamlining workflows, enhancing content quality, and improving productivity. By leveraging the headless CMS's App SDK and GoDaddy's internal CaaS, we developed a system that automates content migration, generates alt text for images, optimizes SEO fields, and enables direct interaction with LLMs. Our next steps involve refining the system: tackling the intricacies of semantic caching, scheduling content embeddings, and standardizing prompts. We're excited about this journey, exploring the capabilities of AI, and pushing our content creation process to new heights.

This post is also published on GoDaddy's Engineering Blog